Semigroup and Aggregate

What's on the menu

Parse data from a CSV file

Group and aggregate

Minimize mechanical and algorithmic code

Leverage type classes and GHC generics

Dataset

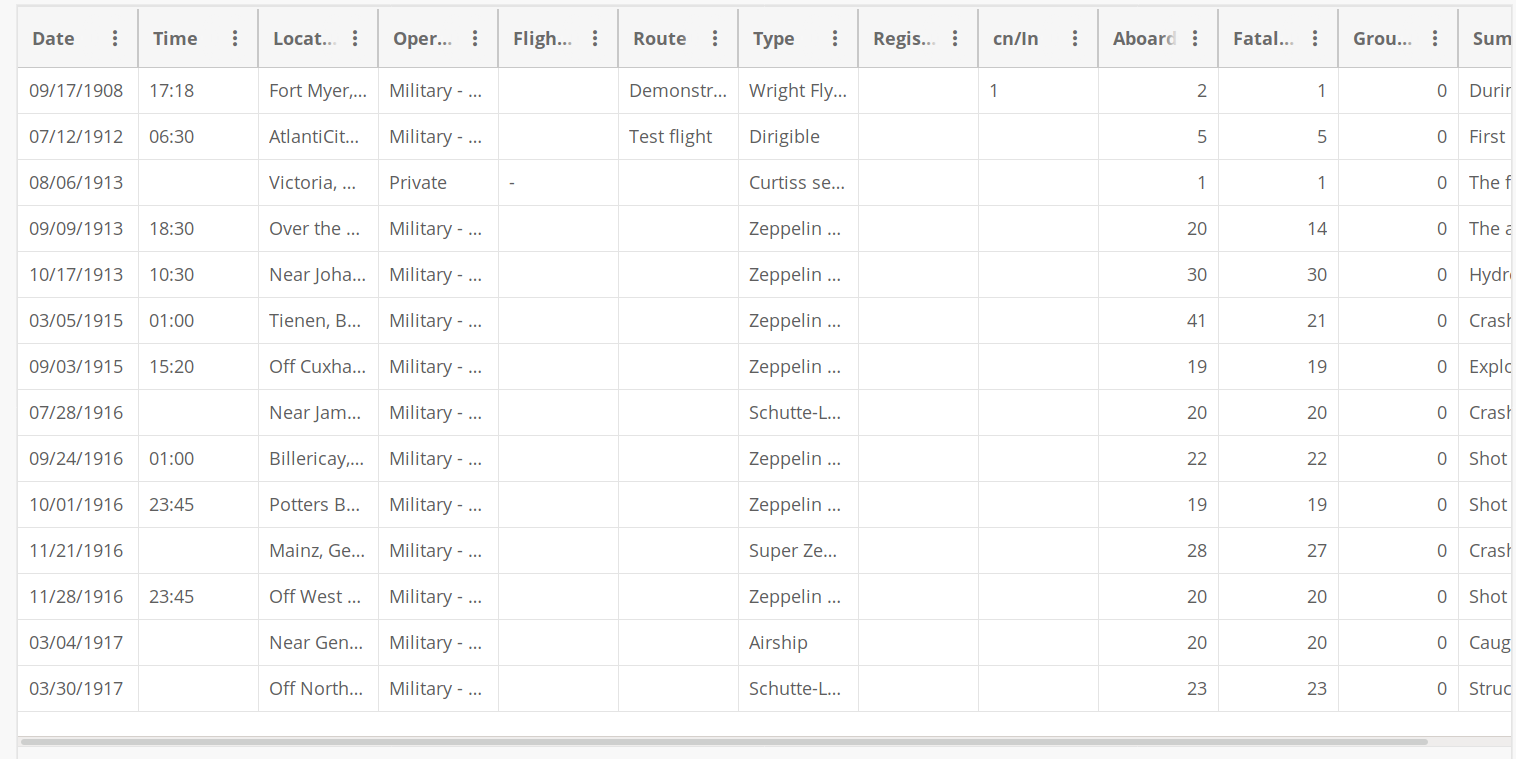

The dataset we are going to work with is airplane crashes since 1908.

- It was originally from here: https://dev.socrata.com/foundry/opendata.socrata.com/q2te-8cvq

- But can still be downloaded from here: https://data.world/data-society/airplane-crashes

Why avoid writing code

Every line we write is a potential bug

Every line we write has to be maintained

Repetitive and boilerplate code obfuscates intent

How can we avoid writing code

Type Classes give ad hoc polymorphism

Generics give you data-type generic programming

Generics + Type Classes + library authors == code for free

Type Classes + laws == correctness for free

GHC can derive many type classes for you

How to parse the CSV

Luckily for us, we can use the cassava library to parse CSV data.

cassava supports handling the data opaquely, but it also supports and encourages parsing it into custom data types.

Parsing with cassava

To parse a column we need an instance of FromField.

But luckily many types already have instances.


To parse a row we need an instance of FromNamedRecord.

We could implement it manually e.g.
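A hand-written instance could look roughly like the sketch below. It assumes the cassava, bytestring, text and vector packages are available; `SimpleRow`, its field names and `parseSample` are hypothetical and only cover three of the columns, and the sample CSV is made up for illustration.

```haskell
{-# LANGUAGE OverloadedStrings #-}

import qualified Data.ByteString.Lazy.Char8 as BL
import Data.Csv (FromNamedRecord (..), decodeByName, (.:))
import Data.Text (Text)
import qualified Data.Vector as V

-- A hypothetical, cut-down row with only three of the columns.
data SimpleRow = SimpleRow
  { srLocation :: Text
  , srOperator :: Text
  , srFatalities :: Maybe Int -- an empty field parses to Nothing
  }
  deriving (Show, Eq)

-- The manual instance: look up each column by its header name.
instance FromNamedRecord SimpleRow where
  parseNamedRecord r =
    SimpleRow
      <$> r .: "Location"
      <*> r .: "Operator"
      <*> r .: "Fatalities"

-- Decode a tiny, made-up CSV document and drop the header.
parseSample :: Either String [SimpleRow]
parseSample = V.toList . snd <$> decodeByName sample
  where
    sample = BL.pack "Location,Operator,Fatalities\nFort Myer,Military,1\nSomewhere,Private,\n"
```

Every column has to be listed by hand, which is exactly the kind of mechanical code the generic approach is meant to avoid.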

Parsing our data

If we look at the CSV, we are interested in parsing the following columns:

- Date
  - The date of the crash, e.g. 09/17/1908
- Location
  - Where the crash took place, e.g. Fort Myer, Virginia
- Operator
  - Who the operator of the plane was, e.g. Military - U.S. Army
- Flight #
  - The flight number associated with the aircraft, e.g. 2L272
- Type
  - The type of the aircraft, e.g. Wright Flyer III
- Aboard
  - The number of passengers on board the aircraft.
- Fatalities
  - The total number of fatalities due to the crash.
- Ground
  - The number of fatalities among people on the ground (non-passengers).

We will use newtypes to tag the column data so that it's clear which columns we are working with.

They are zero cost at run time.

They are low cost at developer time, since we can access the underlying type’s instances via GeneralizedNewtypeDeriving.

-- requires the GeneralizedNewtypeDeriving extension
import Control.Monad ((>=>))
import Data.Csv (FromField (..))
import Data.Text (Text)
import Data.Time (Day, defaultTimeLocale, parseTimeM)

-- the location column wraps "Text" and parses using its instance
newtype Location = Location {getLocation :: Text}
  deriving (FromField, Show, Read, Eq, Ord)

-- the operator column wraps "Text" and parses using its instance
newtype Operator = Operator {getOperator :: Text}
  deriving (FromField, Show, Read, Eq, Ord)

-- the flight number column wraps "Text" and parses using its instance
newtype FlightNumber = FlightNumber {getFlightNumber :: Text}
  deriving (FromField, Show, Read, Eq, Ord)

-- the A/C type column wraps "Text" and parses using its instance
newtype AcType = AcType {getAcType :: Text}
  deriving (FromField, Show, Read, Eq, Ord)

-- the passengers column wraps "Int" and parses using its instance
newtype Passengers = Passengers {getPassengers :: Int}
  deriving (FromField, Show, Read, Eq, Ord, Num)

-- the fatalities column wraps "Int" and parses using its instance
newtype Fatalities = Fatalities {getFatalities :: Int}
  deriving (FromField, Show, Read, Eq, Ord, Num)

-- the ground fatalities column wraps "Int" and parses using its instance
newtype GroundFatalities = GroundFatalities {getGroundFatalities :: Int}
  deriving (FromField, Show, Read, Eq, Ord, Num)

-- our date column wraps "Day" and parses as M/D/Y from the CSV
newtype Date = Date {getDay :: Day}
  deriving (Eq, Ord, Show, Read)

-- customize the CSV parser for the date:
-- first parse the field as a String, then parse that String as a Day
instance FromField Date where
  parseField =
    parseField
      >=> ((Date <$>) . parseTimeM True defaultTimeLocale "%m/%d/%Y")

-- the data type that represents a row in the CSV that we will parse
data CsvRow = CsvRow
  { date :: Date
  , location :: Location
  , operator :: Operator
  , flightNumber :: Maybe FlightNumber
  , acType :: AcType
  , aboard :: Maybe Passengers
  , fatalities :: Maybe Fatalities
  , ground :: Maybe GroundFatalities
  }
  deriving (Generic)

-- We define how to parse a CSV file making use of "genericParseNamedRecord"
-- to do this automatically for us, because "CsvRow" is an instance of "Generic";
-- we only customize the definition of the labels
instance FromNamedRecord CsvRow where
  parseNamedRecord =
    genericParseNamedRecord
      defaultOptions
        { fieldLabelModifier =
            \case
              "flightNumber" -> "Flight #"
              "acType" -> "Type"
              h : t -> Char.toUpper h : t
              [] -> []
        }

Crashes per aircraft type

As a first statistic, let's calculate the crashes per aircraft type.

We can use a newtype again to represent crashes.

As a first attempt we might come up with something like.

But it's not really clear from our type that there can’t be any duplicate aircraft, and we are discarding the Map, which seems wasteful.
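A first attempt in the spirit described above might look like the following sketch. It is not the original slide's code: `Row`, `rowAcType` and the `String`-based `AcType` are simplified, hypothetical stand-ins for `CsvRow`, and it uses only base and containers.

```haskell
import qualified Data.Map.Strict as Map

-- Simplified stand-ins for the newtypes used in the deck.
newtype AcType = AcType String deriving (Eq, Ord, Show)
newtype Crashes = Crashes Int deriving (Eq, Ord, Show)

-- Hypothetical minimal row: only the aircraft type matters here.
newtype Row = Row {rowAcType :: AcType}

-- Count crashes per aircraft type, then flatten the Map into a list.
-- The list type no longer says that every aircraft type occurs only once.
crashesPerAcType :: [Row] -> [(AcType, Crashes)]
crashesPerAcType =
  Map.toList . Map.fromListWith add . map (\r -> (rowAcType r, Crashes 1))
  where
    add (Crashes a) (Crashes b) = Crashes (a + b)
```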


But the result of a successful parse from cassava is not a [CsvRow] but a Vector CsvRow.

We could fix it by doing the following.
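One possible fix, sketched under the same simplifying assumptions (hypothetical `Row` and `String`-based newtypes, base and containers only), is to accept any `Foldable` container and to return the `Map` itself. Whether the original slide did exactly this is an assumption; it is consistent with the `Foldable` constraint on the helper shown later.

```haskell
import Data.Foldable (toList)
import qualified Data.Map.Strict as Map

newtype AcType = AcType String deriving (Eq, Ord, Show)
newtype Crashes = Crashes Int deriving (Eq, Ord, Show)

-- Hypothetical minimal row: only the aircraft type matters here.
newtype Row = Row {rowAcType :: AcType}

-- Works for lists, vectors, Maybe, or any other Foldable container,
-- and keeps the Map so the "one entry per key" guarantee stays in the type.
crashesPerAcType :: Foldable t => t Row -> Map.Map AcType Crashes
crashesPerAcType =
  Map.fromListWith add . map (\r -> (rowAcType r, Crashes 1)) . toList
  where
    add (Crashes a) (Crashes b) = Crashes (a + b)
```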

Crashes per operator

Next, let's calculate crashes per operator.

But this looks almost exactly the same as crashes per aircraft.

We fix it by extracting the common bit to a helper.

crashesPer :: (Foldable t, Ord key) => (CsvRow -> key) -> t CsvRow -> Map key Crashes
crashesPer toKey = Map.fromListWith (+) . map toTuple . toList
  where
    toTuple a = (toKey a, 1)

crashesPerAcType5 :: Foldable t => t CsvRow -> Map AcType Crashes
crashesPerAcType5 = crashesPer acType

crashesPerOperator2 :: Foldable t => t CsvRow -> Map Operator Crashes
crashesPerOperator2 = crashesPer operator

Number of crashes and date of last crash

Let's modify our helper to be more general.

It's a shame that we have to destructure the tuple, combine the elements and then recreate it.

Surely we can do better.
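To make the complaint concrete, here is a sketch of what a tuple-based helper could look like. `Row`, `rowOperator`, `rowDate`, `crashesAndLastPer` and the `(year, month, day)` stand-in for `Date` are all hypothetical; the point is only the shape of the `combine` function.

```haskell
import Data.Foldable (toList)
import qualified Data.Map.Strict as Map

-- Hypothetical stand-in for the Date newtype: (year, month, day).
type Date = (Int, Int, Int)

-- Hypothetical minimal row.
data Row = Row {rowOperator :: String, rowDate :: Date}

-- Number of crashes and date of the last crash per key.
crashesAndLastPer ::
  (Foldable t, Ord key) => (Row -> key) -> t Row -> Map.Map key (Int, Date)
crashesAndLastPer toKey = Map.fromListWith combine . map toTuple . toList
  where
    toTuple r = (toKey r, (1, rowDate r))
    -- destructure both tuples, combine element-wise, rebuild the tuple
    combine (c1, d1) (c2, d2) = (c1 + c2, max d1 d2)
```

Every extra statistic adds another element to the tuple and another clause to `combine`.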

Monoid & Semigroup

A Monoid is a type with an associative binary operation that has an identity.

The binary operator is called <> and the identity is called mempty.

x <> mempty = x (right identity)

mempty <> x = x (left identity)

x <> (y <> z) = (x <> y) <> z (associativity)

Examples: + with 0, * with 1, ++ with [].

A Semigroup is a Monoid without mempty.
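The examples above map directly onto wrappers from base. The sketch below uses only `Data.Monoid` and `Data.Semigroup`; the names ending in `Example` are made up for illustration.

```haskell
import Data.Monoid (Product (..), Sum (..))
import Data.Semigroup (Max (..))

-- + with 0
sumExample :: Int
sumExample = getSum (foldMap Sum [1, 2, 3, 4])

-- * with 1
productExample :: Int
productExample = getProduct (foldMap Product [1, 2, 3, 4])

-- ++ with []: mempty is the empty list and does not change the result
listExample :: [Int]
listExample = [1, 2] <> mempty <> [3]

-- Max String is a Semigroup but not a Monoid:
-- String has no smallest value that could serve as mempty.
maxExample :: String
maxExample = getMax (Max "Douglas DC-3" <> Max "Douglas C-47")
```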

Number of crashes and date of last crash

But isn’t a Map a Monoid?

So can’t we then rely on its Monoid instance?

No, we can’t, because there is no Semigroup / Monoid constraint on Map’s value.

For duplicate keys it overwrites the values and does not aggregate them.
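A small sketch with containers shows the difference. `Map`'s `<>` is a left-biased union, so for a duplicate key only one of the values survives, whereas `unionsWith (<>)` combines them. The names `perOperator`, `overwritten` and `aggregated` are made up for illustration.

```haskell
import qualified Data.Map.Strict as Map
import Data.Monoid (Sum (..))

-- One singleton map per crash.
perOperator :: [Map.Map String (Sum Int)]
perOperator =
  [ Map.singleton "Aeroflot" (Sum 1)
  , Map.singleton "Aeroflot" (Sum 1)
  , Map.singleton "Air France" (Sum 1)
  ]

-- Map's own Monoid: duplicate keys keep a single value, nothing is summed.
overwritten :: Map.Map String (Sum Int)
overwritten = mconcat perOperator

-- What we actually want: combine the values of duplicate keys with <>.
aggregated :: Map.Map String (Sum Int)
aggregated = Map.unionsWith (<>) perOperator
```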

MonoidalMap to the rescue

Luckily MonoidalMap exists and it does have the correct constraint on its Monoid instance.

So we can do
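The helper `groupAggregate` is used in the later examples, but its definition is not shown here, so the body below is an assumption: a minimal sketch that folds every row into a singleton `MonoidalMap`. It assumes the monoidal-containers package (module `Data.Map.Monoidal`); `crashesPerOperator` is a hypothetical usage example on plain strings.

```haskell
import Data.Map.Monoidal (MonoidalMap)
import qualified Data.Map.Monoidal as MonoidalMap
import Data.Monoid (Sum (..))

-- Group rows by a key and aggregate the values of each group with <>.
-- Assumed definition; argument order follows the calls in the deck.
groupAggregate ::
  (Foldable t, Ord key, Semigroup value) =>
  (row -> key) ->
  (row -> value) ->
  t row ->
  MonoidalMap key value
groupAggregate toKey toValue =
  foldMap (\row -> MonoidalMap.singleton (toKey row) (toValue row))

-- Hypothetical usage: count how often each operator name occurs.
crashesPerOperator :: [String] -> [(String, Sum Int)]
crashesPerOperator = MonoidalMap.toList . groupAggregate id (const (Sum 1))
```

Because the aggregation is just `<>` on the value type, changing the statistic means changing the value type and nothing else.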

More stats less tuples

Next we want to collect several statistics.

We could use an N-tuple.
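Tuples of semigroups are themselves semigroups, so an N-tuple works out of the box. The sketch below uses base only; `CrashStats`, `toTuple` and the `(year, deaths)` input are hypothetical simplifications.

```haskell
import Data.Monoid (Sum (..))
import Data.Semigroup (Max (..), Min (..))

-- (year of first crash, year of last crash, total fatalities)
type CrashStats = (Min Int, Max Int, Sum Int)

-- Hypothetical input: a crash described by (year, deaths).
toTuple :: (Int, Int) -> CrashStats
toTuple (year, deaths) = (Min year, Max year, Sum deaths)

-- <> combines the tuples position by position.
combined :: CrashStats
combined = toTuple (1946, 10) <> toTuple (2008, 5) <> toTuple (1970, 1)
```

The drawback is that the positions carry no names, so it is easy to mix up which element is which.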

It would be much nicer if we could use a record.

Does this mean we have to manually define a semigroup instance for our record?

We can use the generic-data library and DerivingVia to automatically derive a Semigroup instance for our record.

-- a data type for the statistics we want to record per row type
data Stats = Stats
  { statFirst :: !(Semigroup.Min Date) -- lower bound Date
  , statLast :: !(Semigroup.Max Date) -- upper bound Date
  , statFatalities :: !(Monoid.Sum Fatalities) -- sum of Fatalities
  , statCrashes :: !(Semigroup.Sum Crashes) -- sum of Crashes
  }
  deriving (Generic, Show)
  deriving (Semigroup) via (Generically Stats)

toStats :: CsvRow -> Stats
toStats a =
  Stats
    { statFirst = Semigroup.Min (date a)
    , statLast = Semigroup.Max (date a)
    , statFatalities = maybe mempty Monoid.Sum (fatalities a)
    , statCrashes = Semigroup.Sum 1
    }

Taking it for a spin

Now we can ask all kinds of interesting questions.


putStrLn "4 operators most likely to crash"
pPrint $
  take 4 $
    sortOn (Down . statCrashes . snd) $
      MonoidalMap.toList $
        groupAggregate operator toStats rows

4 operators most likely to crash
[ ( Operator { getOperator = "Aeroflot" }
  , Stats
      { statFirst = Min { getMin = Date { getDay = 1946-12-04 } }
      , statLast = Max { getMax = Date { getDay = 2008-09-14 } }
      , statFatalities =
          Sum { getSum = Fatalities { getFatalities = 7156 } }
      , statCrashes = Sum { getSum = Crashes { getCrashes = 179 } }
      }
  )
, ( Operator { getOperator = "Military - U.S. Air Force" }
  , Stats
      { statFirst = Min { getMin = Date { getDay = 1943-08-07 } }
      , statLast = Max { getMax = Date { getDay = 2005-03-31 } }
      , statFatalities =
          Sum { getSum = Fatalities { getFatalities = 3717 } }
      , statCrashes = Sum { getSum = Crashes { getCrashes = 176 } }
      }
  )
, ( Operator { getOperator = "Air France" }
  , Stats
      { statFirst = Min { getMin = Date { getDay = 1933-10-31 } }
      , statLast = Max { getMax = Date { getDay = 2009-06-01 } }
      , statFatalities =
          Sum { getSum = Fatalities { getFatalities = 1734 } }
      , statCrashes = Sum { getSum = Crashes { getCrashes = 70 } }
      }
  )
, ( Operator { getOperator = "Deutsche Lufthansa" }
  , Stats
      { statFirst = Min { getMin = Date { getDay = 1926-07-24 } }
      , statLast = Max { getMax = Date { getDay = 1945-04-21 } }
      , statFatalities =
          Sum { getSum = Fatalities { getFatalities = 396 } }
      , statCrashes = Sum { getSum = Crashes { getCrashes = 65 } }
      }
  )
]

putStrLn "4 aircraft types most likely to crash"
pPrint $
  take 4 $
    sortOn (Down . statCrashes . snd) $
      MonoidalMap.toList $ groupAggregate acType toStats rows

4 aircraft types most likely to crash
[ ( AcType { getAcType = "Douglas DC-3" }
  , Stats
      { statFirst = Min { getMin = Date { getDay = 1937-10-06 } }
      , statLast = Max { getMax = Date { getDay = 1994-12-15 } }
      , statFatalities =
          Sum { getSum = Fatalities { getFatalities = 4793 } }
      , statCrashes = Sum { getSum = Crashes { getCrashes = 334 } }
      }
  )
, ( AcType
      { getAcType = "de Havilland Canada DHC-6 Twin Otter 300" }
  , Stats
      { statFirst = Min { getMin = Date { getDay = 1972-12-21 } }
      , statLast = Max { getMax = Date { getDay = 2008-10-08 } }
      , statFatalities =
          Sum { getSum = Fatalities { getFatalities = 796 } }
      , statCrashes = Sum { getSum = Crashes { getCrashes = 81 } }
      }
  )
, ( AcType { getAcType = "Douglas C-47A" }
  , Stats
      { statFirst = Min { getMin = Date { getDay = 1944-06-06 } }
      , statLast = Max { getMax = Date { getDay = 1996-12-09 } }
      , statFatalities =
          Sum { getSum = Fatalities { getFatalities = 609 } }
      , statCrashes = Sum { getSum = Crashes { getCrashes = 74 } }
      }
  )
, ( AcType { getAcType = "Douglas C-47" }
  , Stats
      { statFirst = Min { getMin = Date { getDay = 1942-10-01 } }
      , statLast = Max { getMax = Date { getDay = 2000-09-02 } }
      , statFatalities =
          Sum { getSum = Fatalities { getFatalities = 1046 } }
      , statCrashes = Sum { getSum = Crashes { getCrashes = 62 } }
      }
  )
]

putStrLn "4 deadliest locations"
pPrint $
  take 4 $
    sortOn (Down . statFatalities . snd) $
      MonoidalMap.toList $
        groupAggregate location toStats rows

4 deadliest locations
[ ( Location { getLocation = "Tenerife, Canary Islands" }
  , Stats
      { statFirst = Min { getMin = Date { getDay = 1965-12-07 } }
      , statLast = Max { getMax = Date { getDay = 1980-04-25 } }
      , statFatalities =
          Sum { getSum = Fatalities { getFatalities = 761 } }
      , statCrashes = Sum { getSum = Crashes { getCrashes = 3 } }
      }
  )
, ( Location
      { getLocation = "Mt. Osutaka, near Ueno Village, Japan" }
  , Stats
      { statFirst = Min { getMin = Date { getDay = 1985-08-12 } }
      , statLast = Max { getMax = Date { getDay = 1985-08-12 } }
      , statFatalities =
          Sum { getSum = Fatalities { getFatalities = 520 } }
      , statCrashes = Sum { getSum = Crashes { getCrashes = 1 } }
      }
  )
, ( Location { getLocation = "Moscow, Russia" }
  , Stats
      { statFirst = Min { getMin = Date { getDay = 1952-03-26 } }
      , statLast = Max { getMax = Date { getDay = 2007-07-29 } }
      , statFatalities =
          Sum { getSum = Fatalities { getFatalities = 432 } }
      , statCrashes = Sum { getSum = Crashes { getCrashes = 15 } }
      }
  )
, ( Location { getLocation = "Near Moscow, Russia" }
  , Stats
      { statFirst = Min { getMin = Date { getDay = 1935-05-18 } }
      , statLast = Max { getMax = Date { getDay = 2001-07-14 } }
      , statFatalities =
          Sum { getSum = Fatalities { getFatalities = 364 } }
      , statCrashes = Sum { getSum = Crashes { getCrashes = 9 } }
      }
  )
]

Let's parse the data in constant space

We can use cassava’s streaming decoding support to decode our CSV file in constant space because our helper was so general.

statsFromFile :: Ord key => FilePath -> (CsvRow -> key) -> IO (MonoidalMap key Stats)
statsFromFile pathToFile toKey = do
  csvData <- ByteString.Lazy.readFile pathToFile
  either fail (pure . groupAggregate toKey toStats . snd) $ Data.Csv.Streaming.decodeByName csvData

main :: IO ()
main = do
  -- written with an explicit argument so that the binding stays polymorphic
  -- in the key type (it is used at three different key types below)
  let statsFromFile' toKey = statsFromFile "./Airplane_Crashes_and_Fatalities_Since_1908.csv" toKey
  putStrLn "4 operators most likely to crash"
  pPrint
    . take 4
    . sortOn (Down . statCrashes . snd)
    . MonoidalMap.toList
    =<< statsFromFile' operator
  putStrLn "4 aircraft types most likely to crash"
  pPrint
    . take 4
    . sortOn (Down . statCrashes . snd)
    . MonoidalMap.toList
    =<< statsFromFile' acType
  putStrLn "4 deadliest locations"
  pPrint
    . take 4
    . sortOn (Down . statFatalities . snd)
    . MonoidalMap.toList
    =<< statsFromFile' location

Let's parse the data in constant space and in parallel

If our data were distributed over multiple large files, we could easily process them in parallel, because a Semigroup is essentially what you need to do a map-reduce.

statsFromFiles :: Ord key => [FilePath] -> (CsvRow -> key) -> IO (MonoidalMap key Stats)
statsFromFiles pathToFiles toKey = fmap mconcat $ runConcurrently $ traverse from1File pathToFiles
  where
    from1File pathToFile = Concurrently do
      csvData <- ByteString.Lazy.readFile pathToFile
      either fail (pure . groupAggregate toKey toStats . snd) $ Data.Csv.Streaming.decodeByName csvData
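The reason this works is associativity: aggregating each chunk separately and then combining the partial results gives the same answer as aggregating everything in one pass. The sketch below illustrates that with base and containers only; `Counts` is a hypothetical stand-in for `MonoidalMap key Stats`, and the chunks stand in for files.

```haskell
import qualified Data.Map.Strict as Map
import Data.Monoid (Sum (..))

-- Stand-in for a monoidal map: duplicate keys are combined with <>.
newtype Counts = Counts (Map.Map String (Sum Int))
  deriving (Eq, Show)

instance Semigroup Counts where
  Counts a <> Counts b = Counts (Map.unionWith (<>) a b)

instance Monoid Counts where
  mempty = Counts Map.empty

-- The "map" step: one row becomes one small aggregate.
countOne :: String -> Counts
countOne operatorName = Counts (Map.singleton operatorName (Sum 1))

-- Aggregate every chunk on its own, then reduce the partial results.
reduceChunks :: [[String]] -> Counts
reduceChunks = mconcat . map (foldMap countOne)

-- Aggregate all rows in a single pass.
reduceAll :: [[String]] -> Counts
reduceAll = foldMap countOne . concat
```

Since both functions agree for any chunking, the per-chunk work can be handed to separate threads or machines without changing the result.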